In recent weeks, Google’s AI system Gemini has made quite a splash in the news. Many people are understandably concerned that its responses to prompts revealed deep biases, and they question the agendas these systems are being programmed with.

If you haven’t heard much about this, here are a few examples.

— Gemini generated historically inaccurate images of America’s Founding Fathers, depicting them as more ethnically diverse than they were. At times, it seemed nearly incapable of creating an image of a Caucasian person.

— Gemini refused to give moral judgments on issues that ought not be controversial or hard, and then weighed in heavily on other issues. Examples include: (1) saying it’s impossible to say whether Adolf Hitler or Elon Musk was the greater evil in the world, and (2) refusing to create a job description for an oil and gas lobbyist and then lecturing on why oil and gas companies are bad.

There are several reasons to be concerned about this, but they may not be the reasons you think. As I’ve sat back and tried to listen and understand what went down with Gemini, without going into the weeds, I think the underlying issue was a company trying in vain to create a “neutral” AI program, an effort that went horribly wrong.

In trying to avoid bias, it created a more biased, and frankly weird, AI than anyone could have imagined. This was an epic misstep by Google, and it revealed a significant flaw in large language models and AI more broadly.

Three considerations

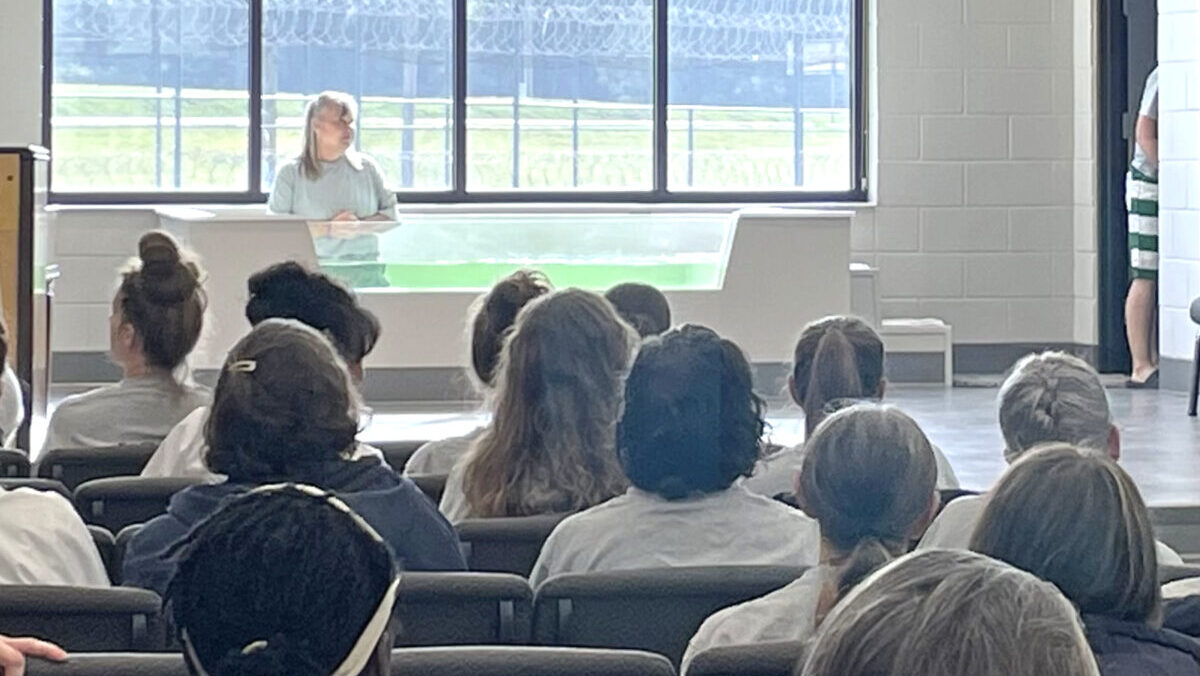

Over the last year, I’ve traveled across the state to discuss issues related to AI and ways Christians ought to engage with it. I think AI is exciting and has a lot of potential, and we should use it.

That said, there are a few issues I think we consistently need to bring up and say out loud, both to ourselves and to legislators as they consider regulating AI.

1. No neutral AI

There is no such thing as a neutral AI, just as there is no such thing as a neutral internet. AI mimics human interactions, and humans are not neutral. We are complex and beautiful creatures with strong convictions and diverse opinions.

2. Transparent AI

Instead of a neutral AI, we should insist on a transparent AI. There is a shocking and deeply troubling lack of transparency in the world of AI research, development and output. A group at Stanford University has created an index that grades AI companies on their transparency. The highest grade is 54 out of 100. The highest grade is failing. This is a problem.

3. Whose morals?

This is a great opportunity for Christians to advocate for transparency in the ethics of the large language models being trained. Chatbots are simply creating predictive text based on the most likely pattern of words. The greater ethical question should be: Whose morals get to direct which word is the most likely?

Your home may really value and adore peanut butter and jelly sandwiches. But my daughters hate jelly. In our house, it’s peanut butter and honey sandwiches — all day, every day. Growing up, my mom often made peanut butter and bananas.

Different values and preferences determine what is the most likely word after “peanut butter and …” At the very least, we need clarity about which value system, which ethical system, establishes that we eat jelly sandwiches instead of honey.
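For readers who like to see the idea in action, here is a minimal sketch of the sandwich illustration in Python. It is a toy word counter, not how Gemini or any real large language model is built, and the function name `most_likely_next` and the two "household" word lists are made up purely for illustration. It simply shows that the same prompt yields a different "most likely" word depending on whose sentences were used as the training text.

```python
from collections import Counter


def most_likely_next(corpus, prompt):
    """Return the word that most often follows `prompt` in `corpus`.

    Hypothetical helper for illustration only: it counts which word
    comes right after the prompt in each sentence and picks the winner.
    """
    prompt_words = prompt.lower().split()
    n = len(prompt_words)
    counts = Counter()
    for sentence in corpus:
        words = sentence.lower().split()
        # Slide over the sentence looking for the prompt words in order.
        for i in range(len(words) - n):
            if words[i:i + n] == prompt_words:
                counts[words[i + n]] += 1
    if not counts:
        return None  # the prompt never appears in this corpus
    return counts.most_common(1)[0][0]


# Two invented "training sets", one per household (assumptions, not real data).
your_home = [
    "we love peanut butter and jelly",
    "pack peanut butter and jelly for lunch",
    "peanut butter and honey is fine too",
]
my_home = [
    "the girls want peanut butter and honey",
    "peanut butter and honey again today",
    "grandma made peanut butter and bananas",
]

print(most_likely_next(your_home, "peanut butter and"))  # jelly
print(most_likely_next(my_home, "peanut butter and"))    # honey
```

The code is identical in both cases. Only the text it learned from changes, and with it the answer. That is why knowing what went into a model, and who chose it, matters so much.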

Gemini is just one system in the large world of AI. It’s a big one, and Google’s misstep is significant. Without running into the realm of conspiracy theories, it’s a great opportunity to speak the truth and uphold Christian ethical standards that stand the test of time as we venture into the new digital age.

EDITOR’S NOTE — This story was written by Katie Frugé and originally published by Baptist Standard.